Ignition and Reality Engine

Driving Data into Unreal With Zero Density

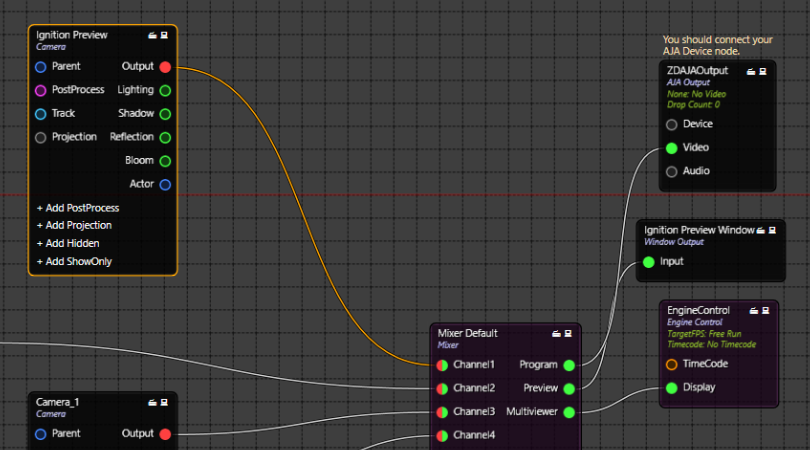

We’re excited to announce the latest technology we’ve integrated with our virtual reality graphics workflow and data integration solution. Zero Density provide a stunning, photo-real virtual set experience with real-time graphics capabilities. Combining that with Ignition’s unparalleled data ingest and graphics control systems puts all of that power in the hands of the producer.

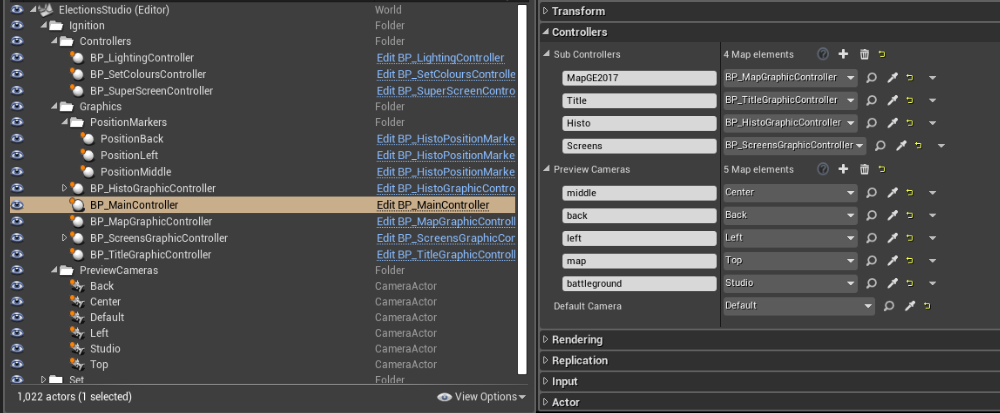

Main Controller

We decided to use our tried-and-tested “Main Controller – Sub Controller” pattern to put together a suite of election graphics within a nice-looking example virtual set.

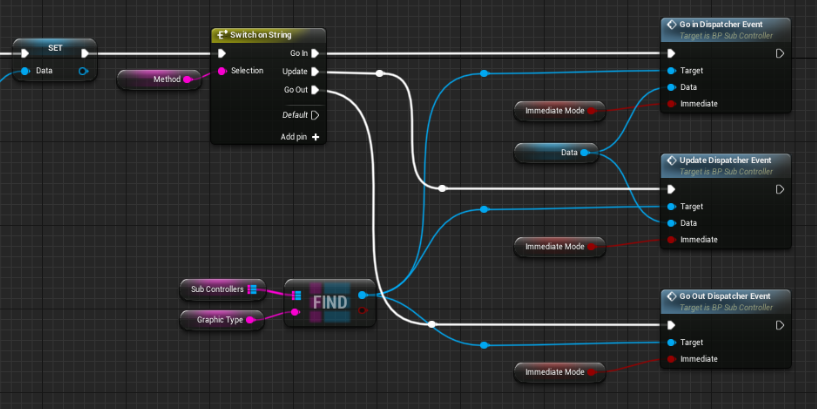

Using Unreal Blueprints, we created a Main Controller whose parameters could be configured to reference each of the distinct graphics in the set.

Each graphic in turn contained Blueprints implementing a common interface of three events: “Go In”, “Update” and “Go Out”.

The Main Controller was then the only thing exposed to Ignition through the Zero Density interface: we created a “ZDActor” and a “ZDFunction” that Ignition could execute to pass the required data into Unreal. The Main Controller’s Blueprints then performed all the relevant transition logic, using the Sub Controller interface to communicate the required state to each graphic in the set.
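To make the division of responsibilities concrete, here is a minimal Python sketch of the pattern outside Unreal. It is not the Blueprint implementation: `SubController`, `MainController`, `execute` and the `LoggingGraphic` stand-in are hypothetical names, `execute` merely plays the role of the single exposed “ZDFunction”, and the transition rule (only one graphic in vision at a time) is an assumption made for illustration.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict, List, Optional, Tuple


class SubController(ABC):
    """Common interface every graphic implements: the Go In / Update / Go Out events."""

    @abstractmethod
    def go_in(self, data: Dict[str, Any]) -> None: ...

    @abstractmethod
    def update(self, data: Dict[str, Any]) -> None: ...

    @abstractmethod
    def go_out(self) -> None: ...


class LoggingGraphic(SubController):
    """Hypothetical stand-in graphic that records events instead of animating."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.visible = False
        self.events: List[Tuple[str, Optional[Dict[str, Any]]]] = []

    def go_in(self, data: Dict[str, Any]) -> None:
        self.visible = True
        self.events.append(("go_in", dict(data)))

    def update(self, data: Dict[str, Any]) -> None:
        self.events.append(("update", dict(data)))

    def go_out(self) -> None:
        self.visible = False
        self.events.append(("go_out", None))


class MainController:
    """Single entry point exposed to the control system (standing in for the ZDFunction)."""

    def __init__(self, graphics: Dict[str, SubController]) -> None:
        self.graphics = graphics           # configured graphics in the set, by name
        self.on_air: Optional[str] = None  # graphic currently in vision, if any

    def execute(self, target: str, data: Dict[str, Any]) -> None:
        graphic = self.graphics[target]
        if self.on_air == target:
            graphic.update(data)  # already in vision: refresh its data only
            return
        if self.on_air is not None:
            self.graphics[self.on_air].go_out()  # assumption: take the previous graphic out first
        graphic.go_in(data)
        self.on_air = target


# Usage: two graphics driven through the one exposed entry point.
results = LoggingGraphic("results")
seat_map = LoggingGraphic("seat_map")
main = MainController({"results": results, "seat_map": seat_map})
main.execute("results", {"party": "A", "seats": 10})
main.execute("results", {"party": "A", "seats": 12})
main.execute("seat_map", {"region": "North"})
```

The point of the design is that the control side only ever needs to know one entry point; adding a new graphic means implementing the three events and registering it with the Main Controller, with no change to what is exposed.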

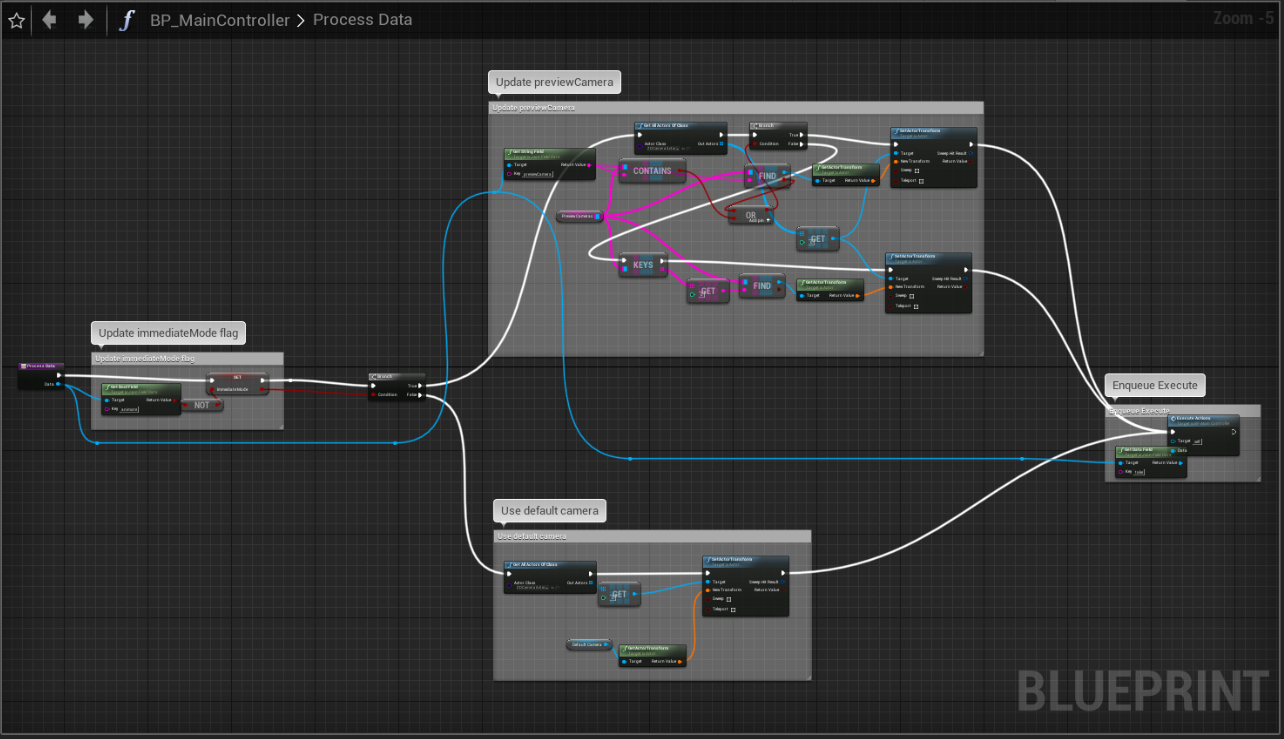

The Main Controller was also responsible for organising the preview experience. If Ignition sent data flagged as preview data, the Main Controller switched the scene camera to point at the graphic being previewed and sent the data to that graphic with an “immediate mode” flag. This told the graphic to jump straight to its new state rather than animating, giving instant feedback.
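The preview branch can be sketched in a few lines of Python. This is an illustrative model, not the Blueprint logic: `Graphic`, `Camera`, `camera_target`, the `preview` and `immediate` parameters, and the choice to leave the camera alone for non-preview data are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class Graphic:
    """Hypothetical graphic: animates by default, snaps straight to its state in immediate mode."""
    name: str
    camera_target: str  # hypothetical name of the camera shot that frames this graphic
    state: Dict[str, Any] = field(default_factory=dict)
    log: List[str] = field(default_factory=list)

    def update(self, data: Dict[str, Any], immediate: bool = False) -> None:
        self.log.append("snap" if immediate else "animate")
        self.state = dict(data)


@dataclass
class Camera:
    target: Optional[str] = None


class MainController:
    def __init__(self, graphics: Dict[str, Graphic], camera: Camera) -> None:
        self.graphics = graphics
        self.camera = camera

    def execute(self, target: str, data: Dict[str, Any], preview: bool = False) -> None:
        graphic = self.graphics[target]
        if preview:
            # Point the scene camera at the graphic being previewed...
            self.camera.target = graphic.camera_target
            # ...and skip the animation so the operator sees the result instantly.
            graphic.update(data, immediate=True)
        else:
            graphic.update(data)  # normal playout: animate to the new state


# Usage: one preview request, then one normal update.
camera = Camera()
bars = Graphic("bars", camera_target="shot_bars")
main = MainController({"bars": bars}, camera)
main.execute("bars", {"A": 40, "B": 35}, preview=True)
main.execute("bars", {"A": 41, "B": 35})
```

Keeping the preview decision inside the Main Controller means individual graphics only need to honour the immediate flag; they never need to know about cameras.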

For more information about our Main Controller pattern, and how we’ve implemented it in other renderer technologies, see this previous post.

Embedded Preview

By routing an engine’s output to an on-screen window, we were able to capture that window and embed it inside Ignition as a preview.

This gives a much tighter feedback loop when building a new graphics sequence: Ignition lets you choose what data goes into the graphics and tweak how each graphic looks, with instant visual feedback on the result.

Scene Commands

As well as the Main Controller, we exposed other functions through Zero Density and called them directly from Ignition to control other elements of the virtual set. We use this to give the director additional actions that can be performed outside the scope of a particular graphics sequence, for example a lighting change or moving part of the set to a new position.

These actions don’t necessarily need to be triggered by the presenter’s clicker (each click advances the sequence to its next sequence point), so they are presented separately from the sequence itself, as a bank of buttons that the gallery can call upon at will.
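The separation between clicker-driven sequence steps and ad hoc scene commands can be illustrated with a small Python sketch. `Sequence`, `SceneCommandBank` and the button labels are hypothetical; in the system described above each button would call a function exposed through Zero Density rather than a Python lambda.

```python
from typing import Callable, Dict, List


class Sequence:
    """Hypothetical graphics sequence, advanced one sequence point per clicker press."""

    def __init__(self, points: List[str]) -> None:
        if not points:
            raise ValueError("a sequence needs at least one sequence point")
        self.points = points
        self.index = -1  # nothing played yet

    def click(self) -> str:
        # Advance to the next sequence point, holding on the last one.
        self.index = min(self.index + 1, len(self.points) - 1)
        return self.points[self.index]


class SceneCommandBank:
    """Hypothetical bank of director buttons, each bound to an exposed scene function."""

    def __init__(self) -> None:
        self.buttons: Dict[str, Callable[[], None]] = {}

    def add(self, label: str, action: Callable[[], None]) -> None:
        self.buttons[label] = action

    def press(self, label: str) -> None:
        self.buttons[label]()  # runs immediately; the sequence position is untouched


# Usage: a scene command fired between two clicks does not move the sequence.
fired: List[str] = []
bank = SceneCommandBank()
bank.add("Lights: evening", lambda: fired.append("lighting_evening"))
bank.add("Raise video wall", lambda: fired.append("video_wall_up"))

seq = Sequence(["intro", "national result", "seat map"])
seq.click()
bank.press("Raise video wall")
seq.click()
```

Because the command bank holds no reference to the sequence, the director can trigger set changes at any point without disturbing where the presenter is in the running order.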